Cached Eyes

“The sentiment against algorithms is almost like xenophobia,” someone recently muttered in a workshop dealing with the so-called “technosphere,” or the complex worldwide technological system fueled by algorithms, imagined on the same scale as the biosphere.

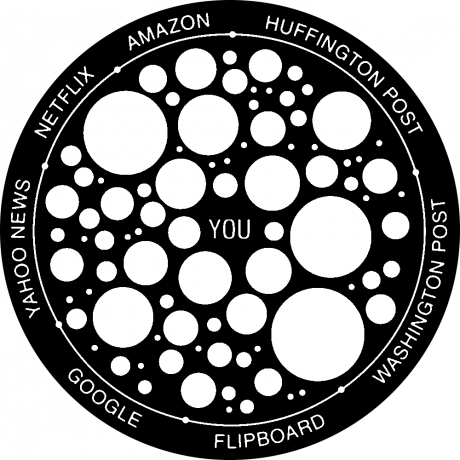

What are these algorithms? And why have they become so detested? The drastic workshop statement unpleasantly crystallizes the comments flourishing on newspaper pages, the chatter on the subway, and the nervous voices of contemporary pessimists who tend to confuse algorithms with enemies. Many seem to take the corporate visions sent out from Silicon Valley for granted: algorithms will render humans obsolete, govern states, optimize economic growth, and handle vast amounts of data, data that human eyes cannot read and grasp, or at least not as fast as an algorithm can. Key to the haunting side of this development seems to be the invisibility of algorithms, even though we are constantly confronted with their effects and witness their transformation of our infoscape. But we do not have to surrender to our algorithmically defined persona, that set of algorithmically selected and delivered opinions and information that activist Eli Pariser has dubbed the “filter bubble.”

Algorithms existed long before Google entered the stage. In fact, there would be no computing machine without algorithms. In 1936 Alan Turing described an abstract machine that introduced our modern notion of the algorithm and serves as a theoretical model of a computer hardware or software system. Looking at the history of algorithms, most bear the names of their authors, a sign of pride and property: Prim’s algorithm, for example, or the Cooley-Tukey algorithm. There have, however, been a fair number of rediscoveries and re-expressions of older formulas among twentieth-century computing algorithms. Beyond computer scientists and mathematicians, the wider public became interested in the power of algorithms when the debate about Google’s influence was sparked. The company’s success is largely based on its own algorithm, PageRank, which it created in 1998 (it’s a funny coincidence that one of the two authors’ last names is Page and that the algorithm ranks web pages).

Media scholar Matteo Pasquinelli criticizes Google’s economy for transforming common intellect into network value. By definition, algorithms are step-by-step sequences of operations that solve specific computational tasks; they are used by search engines, face recognition software, dating apps, visualizations, online transactions, and data compression procedures. Ultimately, the algorithmic domain determines what we see, and even how we see it. Until Google grew big and gained a monopoly, algorithms generally remained unnoticed, since the code was accepted as simply part of the computer. For example, bits of information that a user refers to frequently are kept in what is called a cache. Processors and hard drives have caches. Web browsers have caches. Algorithms are what clean the cache when it fills.
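To make the cache example a little more concrete, here is a minimal sketch of one way an algorithm might “clean the cache when it fills.” The text does not say which rule is meant; the sketch assumes a least-recently-used (LRU) policy, a common choice, in which the entry that has gone longest without being looked at is the first to be forgotten. The class name `LRUCache` and its methods are hypothetical names chosen for illustration only.

```python
from collections import OrderedDict


class LRUCache:
    """A tiny cache that forgets the least recently used entry when full.

    Assumption: LRU is only one of many possible eviction policies;
    the essay does not name a specific one.
    """

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        # OrderedDict remembers insertion order; we keep the most
        # recently used key at the end.
        self._items = OrderedDict()

    def get(self, key, default=None):
        """Look a key up and mark it as recently used."""
        if key not in self._items:
            return default
        self._items.move_to_end(key)
        return self._items[key]

    def put(self, key, value):
        """Store a value, evicting the stalest entry if the cache overflows."""
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            # Step-by-step rule: drop whatever was used longest ago.
            self._items.popitem(last=False)

    def keys(self):
        """Return keys ordered from least to most recently used."""
        return list(self._items)


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.put("portrait", "image data")
    cache.put("fingerprint", "biometric data")
    cache.get("portrait")            # the portrait was just looked at
    cache.put("dna", "trace data")   # cache is full: "fingerprint" is dropped
    print(cache.keys())
```

What the cache holds at any moment is decided entirely by this small rule and by the order of past lookups, which is one modest sense in which an algorithm determines what remains in view.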

In her talk at transmediale, Hito Steyerl mentioned that vision has become a small part of perceiving how data circulates, hinting at exactly these algorithmic conditions. Yet according to her emancipatory approach, humans are not doomed to passivity; humans leave all kinds of traces that are not image-based. A portrait of a person today has become a rather minor piece of evidence in comparison with biometric data like fingerprints and DNA traces. Photography is only one aspect of identifying a person today; its evidentiary function has become secondary in the Age of Big Data. According to Steyerl, producing misunderstandings in the information machine is a way to irritate the system and its infrastructural violence.

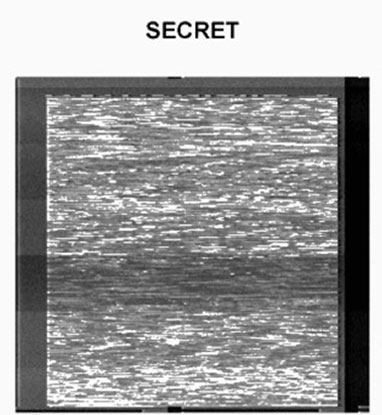

Steyerl proposed an experiential situation to the audience by presenting an image of random dots, of pure visual noise. She used this to demonstrate the experience of apophenia: the perception of a pattern or image in random data where none actually exists. A certain shape reminds you of a familiar object, becoming a phantasm. Steyerl cheekily claimed the non-image she showed to be a “proprietary algorithm, which I have developed.” She then projected a documentary image of the sea to invoke a “mental state” like apophenia, when the brain extracts (from its cache) a familiar pattern from the random visual data of water. Imagining the viewing mode of a machine adds new layers to an image. In Steyerl’s words, the image is an “instrument for active misrecognition.” People don’t see what they are told to see; instead, they use a force of imagination, “generative fiction,” similar to what writer Kathy Acker associated with daydreaming, a method of resisting an enforced image of society.

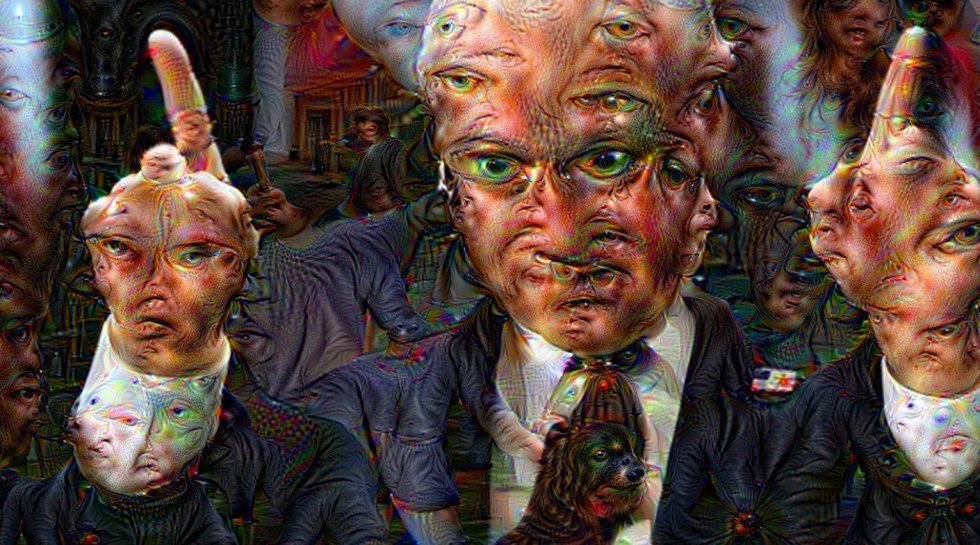

Last summer, Google offered a glimpse into how computers might “see” the world. As Steyerl described, the company launched an image recognition research project called DeepDream, which purported to teach “computers how to see, understand, and appreciate our world” by way of a neural network, but seemed rather to conjure some sort of technological unconscious. This creepy experiment in computer vision was dubbed inceptionism and was inspired by “Mandelbrot sets” whereby an image reveals “progressively ever-finer recursive detail at increasing magnifications” so that fractal self-similarity applies to the entire set, rather than only to parts. Every image presented is first abstracted, and then becomes an entry point to a pluriverse of figures that are in turn abstracted to reveal more figures—an infinite plunge into patterns becoming figures, becoming patterns, and so forth. “Even a relatively simple neural network can be used to over-interpret an image, just like as children we enjoyed watching clouds and interpreting the random shapes.”1

It’s curious to see machines reverting to interpreting clouds. As John Durham Peters has noted, humans have been searching for signs in clouds from time immemorial, and the cloud can be seen as a form of elemental media.2 Have machines now adopted the human tendency towards apophenia? Steyerl asks. Is this a posthuman machine-vision that is simply all too human? What happens to the relationship between figure and ground when the machine, unlike humans, who focus on the figure, may make no distinction between the two? David Berry speculates, “apophenia would be the norm in a highly digital computational society, perhaps even a significant benefit to one’s life chances and well-being if finding patterns become increasingly lucrative. Here we might consider the growth of computational high-frequency trading and financial systems that are trained and programmed to identify patterns very quickly.”3

In IBM’s brochure The Information Machine, published around 1979, the following is written about the computer: “Intelligent machines have once again been removed from the sphere of the uncanny. Today’s robot, the computer, is now just a ‘machine.’”4 Is this development undergoing yet another reversal today? Are the intelligent machines we know as A.I. and robots again being assigned uncanny properties? Commissioned by IBM, the seven-part video work Think was created for the 1964 World’s Fair. On seven screens of different sizes, logical processes are performed: gathering information (such as for a database), abstracting that information, setting up a model, and then manipulating it. The goal is to give information meaning, for which a model is needed. The multi-channel film features infrastructure such as roads and railway tracks, and abstraction in the form of lists and numbers, charts and maps. In a Designer’s Statement IBM announced that “the central idea behind the computer is to elaborate on human-scale acts.”

This machine elaboration is happening. Machines talk to each other without us noticing. Wendy Chun’s play on words “daemonic media” is a term that mediates between tech lingo and mythical or poetic meanings. According to Chun, computers are “inhabited by invisible, orphaned processes that, perhaps like Socrates’ daimonion, help us in our times of need. They make executables magic. UNIX—that operating system seemingly behind our happy spectral Mac desktops—runs daemons; daemons run our email, our web servers. What is not seen becomes daemonic, rather than what is normal, because the user is supposed to be the cause and end of any process.”5

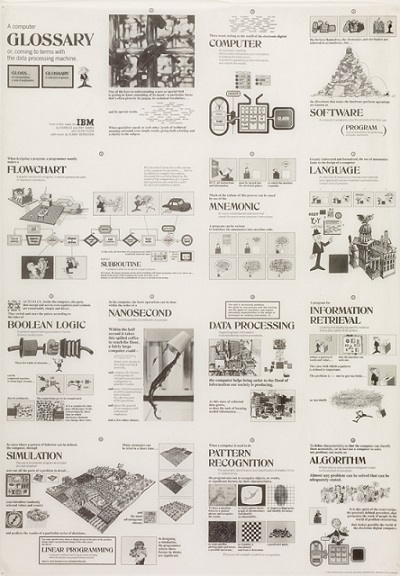

When Charles and Ray Eames introduced IBM computers in the short 1968 film A Computer Glossary, or, coming to terms with the data processing machine, they presented the computers’ infrastructure and operating mode, visible and invisible tasks, mechanisms and programs, among them pattern recognition and the algorithm, two constituent mathematical processes that are far more heatedly discussed today than they were back then. Pattern recognition was defined by the Eameses as “the automatic identification and classification of shapes, forms or relationships,” and algorithms were explained as “a fixed, step-by-step procedure designed to lead to the solution of a problem.”6 Pragmatic and to the point. Today might be a good moment to return to this pragmatic tone and approach, but in a tongue-in-cheek way, as Steyerl suggested.

Vera Tollmann is a writer. Since 2015, she has been a PhD candidate in the graduate program “Aesthetics of the Virtual” at the Academy of Fine Arts in Hamburg and she holds a position as a research associate at the UDK, Berlin, where, together with Hito Steyerl, Maximilian Schmoetzer, and Boaz Levin, she runs the Research Center for Proxy Politics (RCPP).

Keynote Conversation: Anxious to Act with Hito Steyerl and Nicholas Mirzoeff at transmediale/conversationpiece.

- 1. http://googleresearch.blogspot.de/2015/06/inceptionism-going-deeper-into-neural.html

- 2. John Durham Peters, The Marvelous Clouds: Toward a Philosophy of Elemental Media (Chicago, IL: University of Chicago Press, 2015).

- 3. David M. Berry, “The Postdigital Constellation,” in Postdigital Aesthetics: Art, Computation and Design, ed. David M. Berry and Michael Dieter (London: Palgrave Macmillan, 2015), p. 56.

- 4. Die Informationsmaschine: eine kurze Geschichte, Internationale Büromaschinengesellschaft (IBM) Deutschland, Sindelfingen, ca. 1979.

- 5. Wendy Hui Kyong Chun, Programmed Visions: Software and Memory (Cambridge, MA: MIT Press, 2011), p. 87.

- 6. Charles and Ray Eames, A Computer Glossary, IBM, film, 1968, color, 10 min 47 sec, http://www.eamesoffice.com/the-work/a-computer-glossary-2/